The Definitive Guide to Computer Vision Technology: Exploring Techniques, Types and Applications


What is Computer Vision?

Computer vision (CV) is a branch of AI that allows machines to interpret and analyze the visual world around them, typically using deep learning models trained on images and videos. Much like human sight, it detects and identifies objects and extracts their features to support decision-making.

CV automates visual tasks across a variety of industries. Applications include automated quality inspection in manufacturing, facial recognition, self-driving cars, construction, automotive systems, security, and medical image analysis.

A Brief History of Computer Vision:

The history of computer vision began in the late 1950s with early experiments on how animals, such as cats, process visual information. These studies showed that identifying simple shapes is the first step in understanding images, laying the foundation for future research.

During the 1960s, AI became an established field of study, and scientists began focusing on teaching machines to see like humans.

By the 1970s, computer vision found its first commercial use with optical character recognition (OCR) systems, which helped read printed and handwritten text for visually impaired people.

The 1980s introduced convolutional neural networks (CNNs), a major step forward in image recognition.

In the 1990s, with the rise of the internet, large image datasets became available, boosting the development of facial recognition technology.

In the 2000s, tagging and organizing visual data became more common, and real-time face recognition took off.

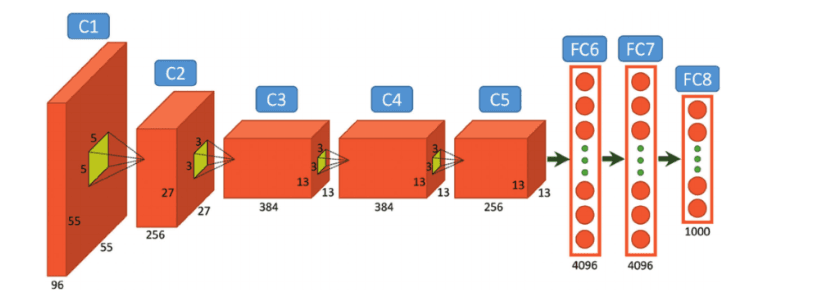

In 2012, AlexNet, a CNN model designed by Alex Krizhevsky, set a milestone for computer vision by significantly improving image recognition accuracy.

Today, computer vision continues to grow thanks to faster hardware and more data, and it now matches or exceeds human accuracy on some specific tasks.

General architecture of AlexNet

How Does Computer Vision Work?

Computer vision enables computers to interpret visual information in a way similar to the human visual system. Algorithms process and analyze digital images and videos to extract insights, using pattern recognition to replicate aspects of human image processing.

Large volumes of training data improve an algorithm's ability to identify patterns and classify images, with the aim of reliable object identification across many applications. In these datasets, the objects in each image are labeled so that the system can learn to recognize them.

1. Image Acquisition:

A computer vision system uses a camera or sensor to gather photos, videos, or other visual information (such as scans). The process begins with capturing images or video clips from one or more sources.

After acquisition, images are preprocessed (enhanced) to improve quality. This involves the following:

Noise Reduction: Remove unwanted noise from the image.

Image Enhancement: Adjust brightness and contrast.

Normalization: Standardize the lighting conditions.

Resize and Crop: Bring images to the same shape and dimensions, keeping the focus on the most important regions.
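The preprocessing steps listed above can be sketched with NumPy. This is a minimal illustration for a single-channel (grayscale) image, not a production pipeline: the function names, the 3x3 mean filter, and the crop and output sizes are all assumptions chosen for the example.

```python
import numpy as np

def box_blur(img: np.ndarray, k: int = 3) -> np.ndarray:
    """Noise reduction: replace each pixel with the mean of its k x k neighborhood."""
    pad = k // 2
    padded = np.pad(img.astype(np.float64), pad, mode="edge")
    out = np.zeros(img.shape, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

def normalize(img: np.ndarray) -> np.ndarray:
    """Enhancement / normalization: stretch intensities to the range [0, 1]."""
    lo, hi = img.min(), img.max()
    if hi == lo:  # flat image, nothing to stretch
        return np.zeros(img.shape, dtype=np.float64)
    return (img - lo) / (hi - lo)

def center_crop(img: np.ndarray, size: int) -> np.ndarray:
    """Crop a size x size window around the image center."""
    h, w = img.shape
    top, left = (h - size) // 2, (w - size) // 2
    return img[top:top + size, left:left + size]

def resize_nearest(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Resize with nearest-neighbor sampling."""
    rows = np.arange(out_h) * img.shape[0] // out_h
    cols = np.arange(out_w) * img.shape[1] // out_w
    return img[rows[:, None], cols]

def preprocess(img: np.ndarray, crop: int = 6, out: int = 4) -> np.ndarray:
    """Chain the four steps: denoise, normalize, crop, resize."""
    return resize_nearest(center_crop(normalize(box_blur(img)), crop), out, out)

image = np.arange(64, dtype=np.uint8).reshape(8, 8)  # synthetic 8x8 test image
print(preprocess(image).shape)  # (4, 4)
```

In practice a library such as OpenCV or Pillow would usually handle these steps; the sketch only makes the order and purpose of each operation concrete.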

2. Image Processing:

Feature Extraction: Algorithms identify the edges, textures, colors, and other features in an image.

Feature Representation: Extracted features are converted into formats suitable for analysis, such as feature vectors.

Post Processing: Improves the output by removing irrelevant regions and smoothing boundaries.

Visualization and Interpretation: Involves overlaying detected objects on images, generating heatmaps, or providing textual descriptions of results.
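To make feature extraction and feature representation concrete, here is a small sketch that turns a grayscale image into a fixed-length feature vector using an intensity histogram. The choice of a histogram, the bin count, and the function name are assumptions for illustration; real systems often use learned features instead.

```python
import numpy as np

def histogram_features(img: np.ndarray, bins: int = 8) -> np.ndarray:
    """Represent a non-empty grayscale image (values 0-255) as a normalized histogram."""
    counts, _ = np.histogram(img, bins=bins, range=(0, 256))
    return counts / counts.sum()

def feature_distance(a: np.ndarray, b: np.ndarray) -> float:
    """L1 distance between two feature vectors (0 means identical histograms)."""
    return float(np.abs(a - b).sum())

dark = np.zeros((4, 4), dtype=np.uint8)        # all-black image
bright = np.full((4, 4), 255, dtype=np.uint8)  # all-white image
print(feature_distance(histogram_features(dark), histogram_features(bright)))
```

Because every image maps to a vector of the same length, images of different sizes can be compared or fed to a classifier directly.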

3. Deep Learning and Neural Networks:

Machine Learning and Deep Learning: Tasks use models that are trained on labeled datasets to learn patterns, including:

Convolutional Neural Networks (CNNs): Effective for image classification and object detection.

Recurrent Neural Networks (RNNs): Used for sequential data in videos.

Transformers: Employed for tasks requiring attention mechanisms, like image captioning.
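As a rough illustration of what a CNN computes, the sketch below runs one convolution, a ReLU activation, and a 2x2 max-pooling step over a tiny image. The kernel here is hand-picked rather than learned, and all function names are assumptions made for the example; a real network stacks many such layers and learns the kernel values from labeled data.

```python
import numpy as np

def conv2d(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' cross-correlation, the operation CNN convolution layers apply."""
    kh, kw = kernel.shape
    oh, ow = img.shape[0] - kh + 1, img.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for y in range(oh):
        for x in range(ow):
            out[y, x] = np.sum(img[y:y + kh, x:x + kw] * kernel)
    return out

def relu(x: np.ndarray) -> np.ndarray:
    """Keep positive responses, zero out the rest."""
    return np.maximum(x, 0.0)

def max_pool2x2(x: np.ndarray) -> np.ndarray:
    """Downsample by taking the maximum of each 2x2 block (odd edges are dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

# A 5x5 image that is dark on the left and bright on the right.
image = np.zeros((5, 5))
image[:, 3:] = 1.0

# Hand-picked kernel that responds to dark-to-bright transitions.
kernel = np.array([[-1.0, 1.0]])

feature_map = max_pool2x2(relu(conv2d(image, kernel)))
print(feature_map)
```

The pooled feature map is nonzero only where the dark-to-bright edge lies, which is the kind of local pattern the early layers of a CNN tend to pick up.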

Understanding Core Computer Vision Techniques:

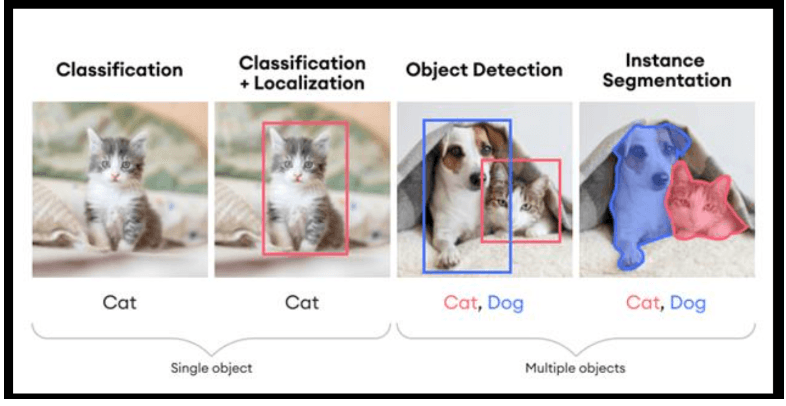

1. Image Classification:

Classification of images is an essential task in computer vision that assigns a label to every image based on its content. It is commonly used in face recognition, medical imaging, and product identification applications. Machines learn to recognize and categorize images into predefined classes by training on huge datasets.
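The final step of a typical classifier can be sketched as follows: the model produces one raw score per predefined class, a softmax turns the scores into probabilities, and the highest one becomes the label. The class names and scores below are made up for illustration and do not come from a trained model.

```python
import math

def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(scores, labels):
    """Return the most likely label and its probability."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]

# Hypothetical labels and scores for a single image.
labels = ["cat", "dog", "car"]
label, confidence = classify([2.0, 0.5, -1.0], labels)
print(label, round(confidence, 2))
```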

2. Object Detection:

Object detection extends image classification: it recognizes the type of each object in an image and also pinpoints its location using bounding boxes. It is essential for self-driving vehicles, surveillance, and retail data analytics.
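Bounding boxes are usually compared with intersection over union (IoU), which measures how much a predicted box overlaps a reference box. A minimal sketch, assuming boxes are given as (x_min, y_min, x_max, y_max) tuples:

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping region (zero if the boxes do not overlap).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

predicted = (0, 0, 2, 2)
reference = (1, 1, 3, 3)
print(iou(predicted, reference))  # overlap 1, union 7, so 1/7
```

An IoU of 1.0 means the boxes coincide and 0.0 means they do not overlap; detectors are commonly scored by counting a prediction as correct when its IoU with a labeled box exceeds a chosen threshold.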

3. Semantic Segmentation:

Semantic segmentation classifies every pixel of an image into a category, creating detailed object maps. This allows objects within an image to be delineated precisely, which makes it useful for applications such as distinguishing healthy from diseased tissue, where the differences are difficult to spot.
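The per-pixel decision can be sketched as follows, assuming a model has already produced one score map per class. The class names and the score values are hypothetical and only serve to show how the scores become a label map.

```python
import numpy as np

# Hypothetical class names, chosen only for illustration.
CLASSES = ["background", "healthy", "diseased"]

def segment(scores: np.ndarray) -> np.ndarray:
    """scores has shape (num_classes, H, W); return an (H, W) map of class ids."""
    return np.argmax(scores, axis=0)

def class_pixel_counts(mask: np.ndarray, num_classes: int) -> np.ndarray:
    """Count how many pixels were assigned to each class."""
    return np.bincount(mask.ravel(), minlength=num_classes)

# Made-up scores for a 2x2 image.
scores = np.zeros((len(CLASSES), 2, 2))
scores[0, 0, 0] = 5.0  # top-left pixel looks like background
scores[1, 0, 1] = 5.0  # top-right pixel looks healthy
scores[2, 1, :] = 5.0  # bottom row looks diseased

mask = segment(scores)
for name, count in zip(CLASSES, class_pixel_counts(mask, len(CLASSES))):
    print(name, count)
```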

4. Instance Segmentation:

Instance segmentation identifies and separates individual instances of the same type of object within an image, so that each one gets its own mask.
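The idea of giving each object its own id can be illustrated with classical connected-component labeling on a binary mask. This is a simplified stand-in, not how modern instance segmentation models work: it only separates objects that do not touch, and the function name is an assumption for the example.

```python
from collections import deque

def label_instances(mask):
    """Assign a distinct id to each 4-connected region of 1s in a binary mask.

    mask is a list of rows containing 0 or 1. Returns (labels, count), where
    labels has the same shape as mask and 0 means background.
    """
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if mask[y][x] and not labels[y][x]:
                count += 1  # found a new, unlabeled object
                labels[y][x] = count
                queue = deque([(y, x)])
                while queue:  # flood-fill the rest of this object
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not labels[ny][nx]:
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count

mask = [
    [1, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 1],
]
labels, count = label_instances(mask)
print(count)  # 2
```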

5. Feature Detection and Matching:

Feature detection finds particular points of interest in an image, and feature matching pairs those points across different images. These techniques are used to find objects or patterns in an image (image recognition), stitch two pictures together based on shared features (image stitching), or track a point as it moves through the frames of an image sequence.
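Matching is often done by comparing descriptor vectors computed around each point of interest. The sketch below uses nearest-neighbor matching with a ratio test, which keeps a match only when the best candidate is clearly closer than the second best. The descriptors are made-up 2D vectors, the 0.75 ratio is an assumed default, and the second image is assumed to contain at least two descriptors.

```python
import numpy as np

def match_descriptors(desc_a: np.ndarray, desc_b: np.ndarray, ratio: float = 0.75):
    """Return (i, j) pairs where desc_a[i] matches desc_b[j] under the ratio test.

    desc_a and desc_b are arrays of shape (n, d) and (m, d) with m >= 2.
    """
    matches = []
    for i, d in enumerate(desc_a):
        dists = np.linalg.norm(desc_b - d, axis=1)
        order = np.argsort(dists)
        best, second = order[0], order[1]
        # Accept only unambiguous matches.
        if dists[best] < ratio * dists[second]:
            matches.append((i, int(best)))
    return matches

# Made-up descriptors for two images.
image_a = np.array([[0.0, 0.0], [10.0, 10.0], [2.5, 2.5]])
image_b = np.array([[10.0, 10.1], [0.1, 0.0], [5.0, 5.0]])
print(match_descriptors(image_a, image_b))  # [(0, 1), (1, 0)]
```

The third descriptor in `image_a` is almost equally close to two candidates, so the ratio test rejects it rather than risk a wrong match.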

6. Edge Detection:

Edge detection identifies the boundaries of objects within an image, crucial for scene interpretation, image editing, and 3D reconstruction.
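One classical way to find edges is the Sobel operator, which estimates the horizontal and vertical intensity gradients and combines them into a magnitude. A minimal NumPy sketch for a grayscale image follows; the function name and the edge-replicating padding are choices made for this example.

```python
import numpy as np

def sobel_magnitude(img: np.ndarray) -> np.ndarray:
    """Gradient magnitude of a 2D grayscale image using 3x3 Sobel kernels."""
    p = np.pad(img.astype(np.float64), 1, mode="edge")
    # Horizontal gradient: right column minus left column, center row weighted by 2.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Vertical gradient: bottom row minus top row, center column weighted by 2.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)

# A 4x6 image with a vertical boundary between columns 2 and 3.
image = np.zeros((4, 6))
image[:, 3:] = 10.0

edges = sobel_magnitude(image) > 20.0  # threshold is an arbitrary example value
print(edges.astype(int))
```

Only the pixels on either side of the boundary exceed the threshold, so the resulting mask traces the edge of the bright region.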

7. Motion Analysis and Object Tracking:

Motion analysis studies the movement of objects over time, and object tracking follows an object’s trajectory across video frames. These tasks are commonly used in surveillance, sports analytics, and vehicle navigation.
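A very simple form of tracking is background subtraction: pixels that differ from a static background frame are treated as the moving object, and their centroid gives its position in each frame. The sketch below assumes a fixed camera, a single moving object, and an arbitrary threshold; practical trackers are considerably more robust.

```python
import numpy as np

def track_object(frames, background: np.ndarray, threshold: int = 25):
    """Return the (row, col) centroid of changed pixels per frame, or None if nothing moved."""
    path = []
    for frame in frames:
        # Use a signed type so the subtraction cannot wrap around for uint8 images.
        diff = np.abs(frame.astype(np.int32) - background.astype(np.int32))
        ys, xs = np.nonzero(diff > threshold)
        if ys.size == 0:
            path.append(None)
        else:
            path.append((float(ys.mean()), float(xs.mean())))
    return path

# Synthetic video: a single bright pixel moving right along row 2.
background = np.zeros((5, 5), dtype=np.uint8)
frames = []
for col in range(3):
    frame = background.copy()
    frame[2, col] = 200
    frames.append(frame)

print(track_object(frames, background))  # [(2.0, 0.0), (2.0, 1.0), (2.0, 2.0)]
```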

8. Pose Estimation:

Pose estimation involves predicting the posture or pose of objects or people from images or video, used in sports analysis, animation, and human-computer interaction.
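Once a pose estimator has produced keypoints such as shoulder, elbow, and wrist positions, applications like sports analysis often derive joint angles from them. The sketch below shows only that downstream step; the keypoint coordinates are made up, and the estimator itself is assumed to exist elsewhere.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct from the joint")
    # Clamp to guard against tiny floating-point overshoots outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))

# Hypothetical (x, y) keypoints for one arm.
shoulder, elbow, wrist = (0.0, 0.0), (1.0, 0.0), (1.0, 1.0)
print(joint_angle(shoulder, elbow, wrist))
```

For the example keypoints the arm is bent at a right angle at the elbow, and a fully straight arm would give 180 degrees.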

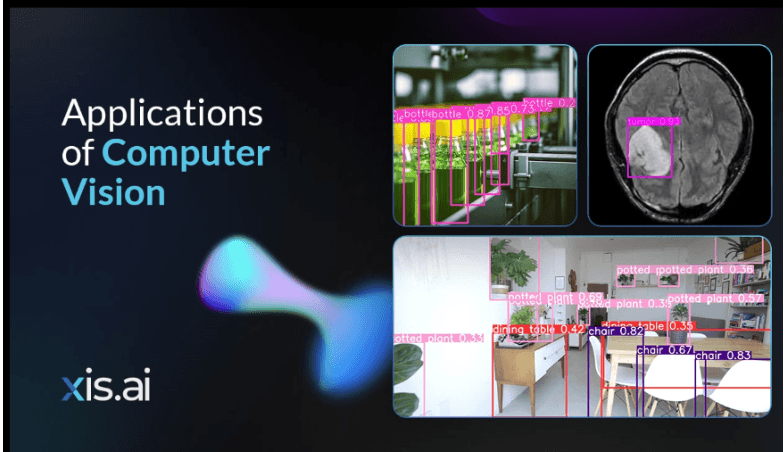

Applications of Computer Vision

Computer vision has become a major focus across industries and is regarded as one of the leading areas of innovation in artificial intelligence and machine learning. As demand for these technologies rises, rapid progress in computer vision applications continues to drive change, improving processes and expanding what machines can do.

Here are some key applications of computer vision across various industries:

Healthcare:

Computer vision is changing healthcare by making diagnostics, surgeries, and patient monitoring better. It improves medical imaging by analyzing X-rays, MRIs, and CT scans to find issues like cancer and neurological disorders early on. During surgeries, computer vision gives real-time visual help and supports robotic procedures, enhancing precision and results. It also allows for remote patient monitoring using sensors to notice small changes in health. Additionally, it helps tailor treatments by analyzing specific disease features and improves orthodontics by making treatment planning and diagnosis more accurate.

Automotive:

Computer vision is very important in the automotive sector. It helps detect manufacturing defects in vehicles, improving reliability and quality. The technology enables features like automatic braking and lane-keeping assistance, making driving safer. It also improves transportation by helping self-driving trucks navigate and avoid obstacles, and it helps manage fleets by tracking where vehicles are and how drivers behave. Cameras check road conditions and suggest better routes, making driving safer and more efficient.

Retail and E-Commerce:

Computer vision is changing how people shop and how stores work. In physical stores, it helps keep track of items, so staff know when to refill shelves. It also allows for checkout-free shopping, where customers can grab what they want and leave without waiting in line, as the system automatically sees what they bought. For online shopping, computer vision lets customers find products by uploading pictures and try on clothes or makeup using their own photos or videos.

Security and Surveillance:

Computer vision benefits security and surveillance by automatically spotting and analyzing potential threats. Face-recognition technology in public spaces can help schools or airports control entry and add a layer of security. It can also detect attempts to break into restricted areas, or monitor a crowd so that staff can respond quickly if an incident occurs. It is also reportedly useful for early detection of forest fires and for monitoring whether factory staff follow safe practices. All in all, computer vision not only enhances safety in our environment but also reduces the need for constant human monitoring.

Agriculture:

Computer vision is changing agriculture by enabling farmers to manage their crops and livestock more effectively. Using drones and cameras, farmers can assess crop health, detect pests and diseases, and apply the appropriate amounts of water and fertilizers, resulting in increased yields and reduced environmental impact. It also aids in monitoring livestock for early signs of illness, facilitating prompt treatment. Furthermore, computer vision can identify and target weeds and pests, minimizing the reliance on chemicals. In summary, these technologies enhance the efficiency and sustainability of farming.

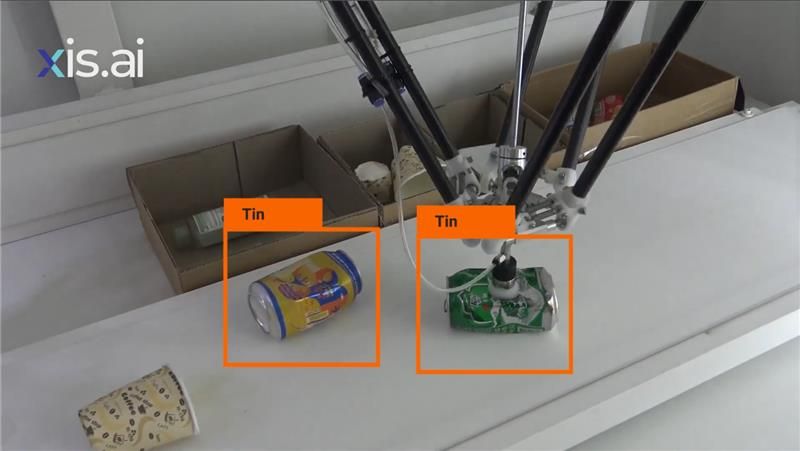

Manufacturing:

In the manufacturing sector, computer vision improves quality control and automates processes. Using high-resolution cameras and advanced image analysis, it inspects products on assembly lines in real time, quickly identifying defects to ensure that only high-quality items are delivered to customers. It also supports robots in tasks like assembly and packing, improving efficiency. By analyzing machinery to predict maintenance needs, it helps reduce recalls and costs. These innovations make manufacturing more efficient, cost-effective, and scalable, resulting in smarter production systems.

Packaging and Pharmaceuticals:

Computer vision is transforming the packaging and pharmaceutical industries by enhancing quality control and boosting efficiency. In packaging, it automatically detects defects like misaligned seals and incorrect labels, ensuring that products adhere to high standards and minimizing the risk of recalls. Additionally, it simplifies inventory management and logistics.

In the pharmaceutical field, computer vision plays a vital role in identifying flaws in products, such as irregular pill shapes and damaged packaging, which is essential for safety. It also accelerates drug discovery by analyzing images to swiftly identify potential compounds.

Conclusion:

Computer vision is transforming various industries by enabling machines to interpret visual data, significantly enhancing efficiency, accuracy, and safety. In manufacturing, it automates quality inspection and guides robotic automation, while in agriculture, it aids in crop monitoring and livestock management. Retail benefits from automated checkouts and visual search, and security uses it to identify threats in public spaces.

As technology advances, the potential of computer vision continues to grow, promising innovative solutions. However, it’s crucial to address ethical concerns like privacy and data security to ensure responsible use. Overall, computer vision is a powerful force that is reshaping our world and paving the way for a smarter and more efficient future.
