The Role of Computer Vision in Automation

Computer vision has significantly enhanced automation in numerous fields, acting as the "eyes" that capture visual data and translate it into useful information. Thanks to better sensing capabilities and advances in artificial intelligence, modern vision systems are far more sophisticated than their early manufacturing applications, and they now improve performance in many other industrial settings. By working closely with automation, computer vision allows machines to understand and use visual information accurately, simplifying processes and reducing the need for human involvement. The technology is used in manufacturing quality control, advanced medical diagnostics, and more, making work faster and more effective. As a result, automated processes are becoming more innovative, faster, and more precise.

Nevertheless, as industries adopt these tools, two challenges, system complexity and data precision, must still be addressed before their full potential can be realized.

What is computer vision?

Computer vision is an area of artificial intelligence (AI) that teaches machines to analyze, process, and understand visual data from their surroundings. By imitating human vision, it gives automated systems the ability to carry out perception and analysis tasks.

Key aspects of computer vision include:

Image and video analysis

Pattern and object recognition

Real-time decision-making using visual data

This technology enables systems to carry out tasks where the understanding and interpretation of visual information are integral to the system's performance.

Why is Computer Vision Essential in Automation?

Computer vision advances automation by increasing accuracy, efficiency, and scalability while reducing the need for human intervention. Its key contributions include:

1. Enhancing Accuracy

Reduces human error through consistent and precise visual analysis.

Example: In the pharmaceutical industry, it helps verify that each product meets the required quality standards.

2. Real-Time Decision Making

Processes images or videos instantly, enabling immediate adjustments.

Example: Automated vehicles recognize road signs, traffic barriers, and pedestrians in real time.

3. Cost and Time Efficiency

Reduces labor costs by automating repetitive visual tasks.

Speeds up processes like inspection and sorting.

4. Scalability

Enables large-scale automation of even very complex visual tasks (e.g., defect detection).

Components of a Computer Vision System

A typical computer vision system comprises the following elements:

- Camera: Captures visual information.

- Algorithms: Analyze and interpret data.

- Techniques: Depend on whether 1D, 2D, or 3D vision is used.

- Analysis: Extracts meaningful information from images or videos.

- Output Generation: Produces results for control and decision-making processes.

Building Blocks of Computer Vision in Automation

Computer vision (CV) underpins automation by allowing machines to scan, process, and interpret visual information and make intelligent decisions. The following are the basic elements and hardware that drive computer vision in automated systems.

1. Data Acquisition

Computer vision starts with acquiring visual data through different camera and sensor modalities:

2D Cameras: Ideal for basic image analysis, such as barcode scanning.

3D Cameras and LiDAR: Provide depth estimation and spatial awareness, which are essential for robotics and autonomous vehicles.

Spectral Imaging: Used in agriculture to evaluate plant health by analyzing non-visible wavelengths.

High-speed cameras, coupled with optimized sensors, help minimize frame loss during real-time data acquisition, which is vital for fast-moving systems like assembly lines.

2. Image Preprocessing

Raw visual data is generally noisy and of varying quality because of lighting, motion, or viewing angle. Preprocessing cleans and standardizes this data for further analysis.

Key steps include:

Noise Reduction: Techniques like Gaussian blur or median filtering smooth images.

Normalization: Adjusts pixel values to maintain consistent contrast and brightness.

Geometric Transformations: Rescaling, cropping, and rotating images to ensure proper alignment.
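
As a rough illustration of the noise reduction and normalization steps above, the following is a minimal sketch in plain Python. It assumes a grayscale image stored as a list of rows; the function names `median_filter` and `normalize` are illustrative, and a production system would more likely rely on an optimized image library.

```python
from statistics import median


def median_filter(image, radius=1):
    """Replace each pixel with the median of its (2*radius+1)^2 neighborhood.

    Border pixels use only the neighbors that fall inside the image.
    """
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = [
                image[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            out[y][x] = median(window)
    return out


def normalize(image):
    """Min-max scale pixel values to the range [0.0, 1.0]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A constant image has no contrast to stretch.
        return [[0.0 for _ in row] for row in image]
    return [[(p - lo) / (hi - lo) for p in row] for row in image]


# A flat patch with one "salt" noise pixel in the middle.
noisy = [
    [10, 10, 10],
    [10, 255, 10],
    [10, 10, 10],
]
cleaned = median_filter(noisy)  # the 255 outlier is replaced by 10
```

The median is used here because a single extreme pixel does not shift it, whereas an averaging filter would smear the outlier into its neighbors.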

To keep latency low, preprocessing can be offloaded to GPUs or edge devices so that it does not slow down the rest of the system.

3. Feature Extraction and Object Detection

Feature extraction allows machines to identify patterns, contours, and objects from visual information.

Traditional Methods:

- SIFT (Scale-Invariant Feature Transform): Identifies features regardless of scaling or rotation.

- HOG (Histogram of Oriented Gradients): Detects objects based on gradient directions.

Deep Learning Models:

- CNNs (Convolutional Neural Networks): Learn hierarchical patterns for automated feature extraction.

- YOLO (You Only Look Once): Designed for real-time object detection in automation.

Example: In manufacturing, CNN models identify defects by analyzing product surfaces.
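
To make the idea behind gradient-based descriptors such as HOG more concrete, here is a simplified sketch that builds a single histogram of gradient orientations for a small grayscale patch. It is not a full HOG implementation: the cell grid, block normalization, and bin interpolation are omitted, and the function name `orientation_histogram` is an assumption made for this example.

```python
import math


def orientation_histogram(image, bins=9):
    """Histogram of unsigned gradient orientations (0-180 degrees).

    Each interior pixel votes for the bin of its gradient direction,
    weighted by the gradient magnitude.
    """
    height, width = len(image), len(image[0])
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Central differences approximate the horizontal and vertical gradients.
            gx = image[y][x + 1] - image[y][x - 1]
            gy = image[y + 1][x] - image[y - 1][x]
            magnitude = math.hypot(gx, gy)
            if magnitude == 0:
                continue
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            hist[int(angle // bin_width) % bins] += magnitude
    return hist


# A patch with a vertical edge: dark on the left, bright on the right.
vertical_edge = [[0, 0, 100, 100] for _ in range(4)]
hist = orientation_histogram(vertical_edge)
# All gradient energy falls into the first bin (orientations near 0 degrees).
```

A patch containing a horizontal edge would instead concentrate its votes in the bin around 90 degrees, which is what lets such histograms distinguish shapes by their dominant edge directions.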

4. Image Classification

The system then assigns the extracted objects or patterns to predefined categories. This is achieved using:

- Softmax Layers: Generate multi-class probability distributions.

- Transfer Learning: Fine-tunes pre-trained networks, e.g., ResNet or VGG, for specific tasks in a comparatively short time and with relatively modest computational and memory resources.

Highlight: In scenarios with data scarcity, fine-tuning pre-trained models instead of training from scratch is a well-established approach that supports rapid, low-cost deployment.
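
The role of the softmax layer can be sketched in a few lines of plain Python. The example assumes the network has already produced one raw score (logit) per class; the class labels and the helper name `classify` are hypothetical and only serve the illustration.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    # Subtracting the maximum keeps exp() from overflowing on large scores.
    shift = max(logits)
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, labels):
    """Return the most probable label together with its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


# Hypothetical scores for three inspection outcomes.
label, confidence = classify([2.0, 1.0, 0.1], ["ok", "scratch", "dent"])
```

In an automation setting, the returned probability could be compared against a confidence threshold, for instance to route uncertain items to manual inspection.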

5. Real-Time Decision-Making

Automation systems rely on real-time (near-instantaneous) decisions based on the images they capture.

This requires:

- Edge Computing: Performs image processing locally on devices such as Nvidia Jetson or Intel Movidius.

- Parallel Processing: Frameworks like TensorFlow or PyTorch, which support GPU acceleration through CUDA.

Real-time analysis lets automated systems react with minimal delay, which enables their use in dynamic situations like autonomous vehicles or robotic manufacturing lines.

Performance Optimization Techniques

1. Model Compression

Deploying models on edge devices involves minimizing their size while keeping the accuracy high.

Techniques include:

Pruning: Removing redundant weights in neural networks.

Quantization: Converting high-precision weights to lower-bit formats.
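
The two techniques can be illustrated on a flat list of weights. The sketch below assumes magnitude-based pruning and symmetric 8-bit quantization, which are common choices but only two of several variants; the function names are illustrative and not taken from any framework.

```python
def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute value."""
    count = int(len(weights) * fraction)
    if count == 0:
        return list(weights)
    # Indices of the `count` smallest-magnitude weights.
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:count]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric linear quantization to integers in [-127, 127].

    Returns the integer values and the scale needed to map them back.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(quantized, scale):
    """Map quantized integers back to approximate floating-point weights."""
    return [q * scale for q in quantized]


weights = [0.5, -0.01, 0.02, -0.9]
sparse = prune_by_magnitude(weights, 0.5)  # [0.5, 0.0, 0.0, -0.9]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
```

Each restored weight differs from the original by at most half a quantization step (`scale / 2`), which is the accuracy traded for storing one byte per weight instead of a 32-bit float.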

2. Adaptive Thresholding

For real-time image segmentation, adaptive thresholding computes the threshold from local image statistics, so it adjusts as illumination changes and reduces false negatives.
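
A minimal sketch of the idea, assuming the local mean is used as the per-pixel threshold (other local statistics are possible). The function name `adaptive_threshold` and its `offset` parameter are choices made for this example.

```python
def adaptive_threshold(image, radius=1, offset=0):
    """Mark a pixel as foreground (1) when it is brighter than the mean
    of its local neighborhood minus `offset`, otherwise background (0)."""
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = [
                image[ny][nx]
                for ny in range(max(0, y - radius), min(height, y + radius + 1))
                for nx in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            local_mean = sum(window) / len(window)
            out[y][x] = 1 if image[y][x] > local_mean - offset else 0
    return out


# One scan line whose background brightens from left to right,
# with a bright spot at index 1 and another at index 5.
row = [[10, 50, 30, 40, 50, 90, 70]]
mask = adaptive_threshold(row, radius=1)
```

On this scan line, a single global threshold of 60 would miss the spot at index 1 (value 50) and wrongly pick up the bright background at index 6 (value 70), whereas the local comparison marks only indices 1 and 5.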

3. Pipeline Parallelization

By running tasks such as preprocessing and feature extraction in parallel, latency can be decreased, allowing the system to operate at a high frame rate.
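
One way to picture this is a pipeline in which each stage runs in its own thread and passes frames to the next stage through a queue, so one frame can be preprocessed while the previous one is analyzed. The sketch below uses Python's standard `threading` and `queue` modules; `run_pipeline` and the stage functions are hypothetical names for this example.

```python
import queue
import threading

SENTINEL = object()  # signals that no more frames will arrive


def stage(worker, inbox, outbox):
    """Apply `worker` to every item from `inbox` and forward the result."""
    while True:
        item = inbox.get()
        if item is SENTINEL:
            outbox.put(SENTINEL)
            break
        outbox.put(worker(item))


def run_pipeline(frames, workers):
    """Push frames through the stages in order and collect the results."""
    # Bounded queues stop a fast stage from running far ahead of a slow one.
    queues = [queue.Queue(maxsize=4) for _ in range(len(workers) + 1)]

    def feed():
        for frame in frames:
            queues[0].put(frame)
        queues[0].put(SENTINEL)

    threads = [threading.Thread(target=feed)]
    threads += [
        threading.Thread(target=stage, args=(w, queues[i], queues[i + 1]))
        for i, w in enumerate(workers)
    ]
    for t in threads:
        t.start()

    results = []
    while True:
        item = queues[-1].get()
        if item is SENTINEL:
            break
        results.append(item)
    for t in threads:
        t.join()
    return results


# Stand-ins for "preprocess" and "extract features" on numeric frames.
results = run_pipeline(range(5), [lambda f: f + 1, lambda f: f * 2])
```

Because every stage is a single thread reading from a first-in, first-out queue, frame order is preserved. In CPython, threads only overlap when a stage releases the global interpreter lock, as I/O and many native image-processing calls do; CPU-bound pure-Python stages would need processes instead.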

Computer Vision Applications

Computer vision is changing many application areas by allowing systems to use visual information and gain valuable insights. Companies in logistics and healthcare are using this technology to improve operations and increase efficiency.

The following are some of the most common computer vision applications in various fields:

- Augmented Reality

Computer vision smoothly integrates virtual objects into the real world, improving user experiences in gaming, training, and design.

- Autonomous Vehicles

Driverless cars use real-time object detection and road analysis to safely and efficiently guide the vehicle.

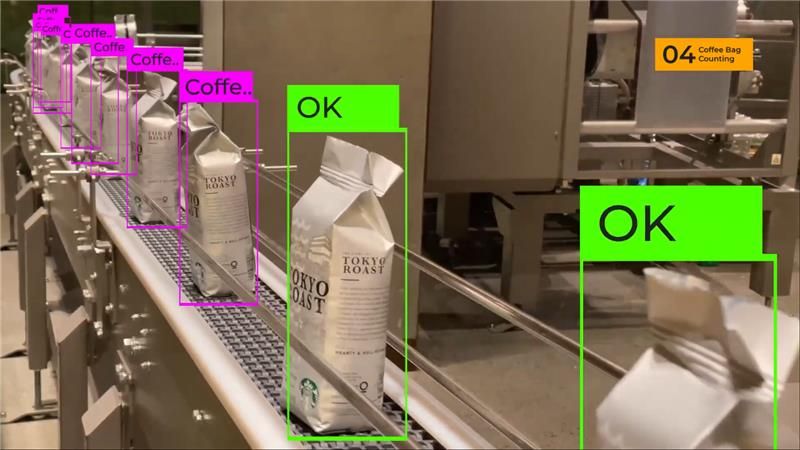

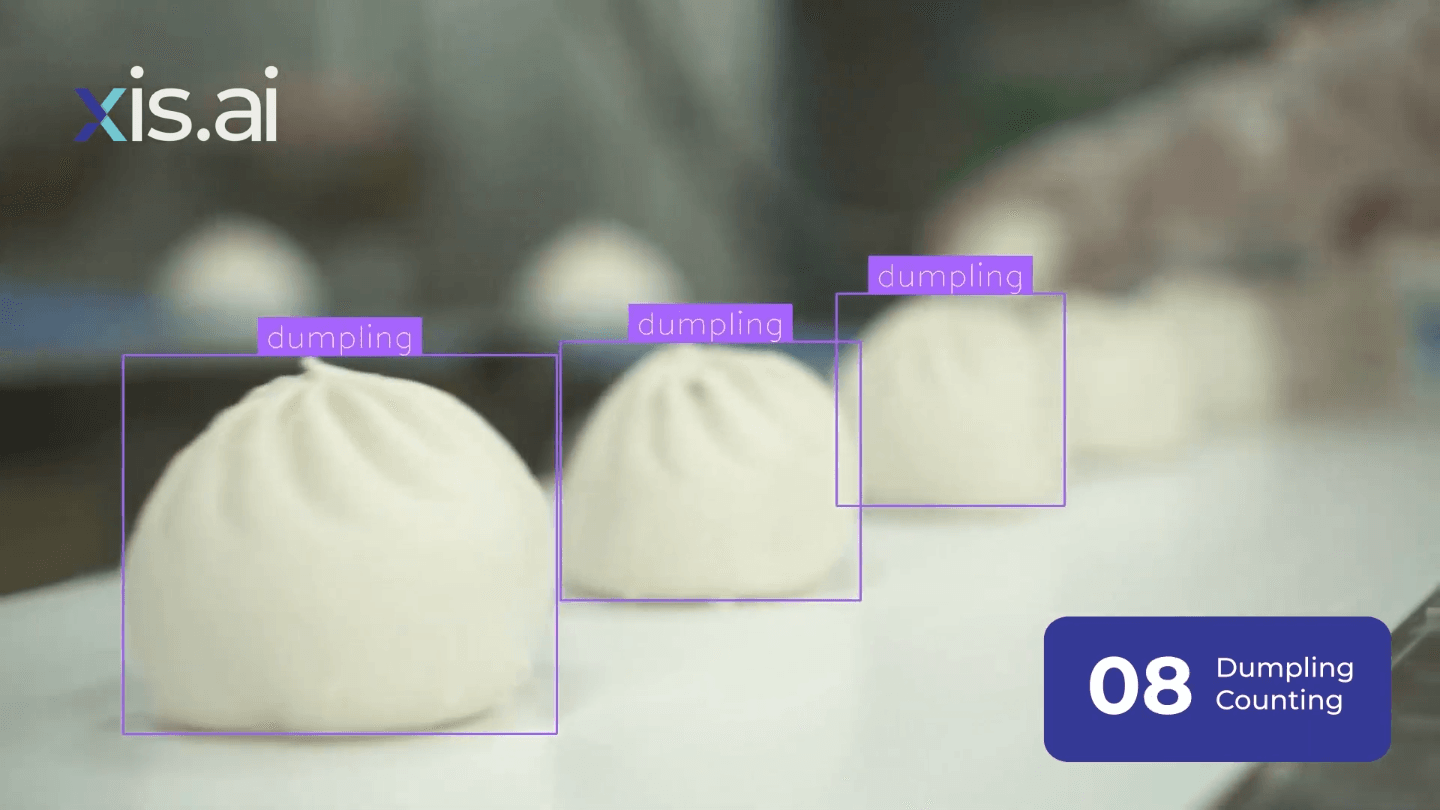

- Manufacturing

Computer vision is used to monitor machines for operational efficiency and ensure quality control by checking machines, products, and packaging on production lines.

- Spatial Analysis

This technology identifies people or objects and tracks their movement across spaces, which can be used for crowd management, retail analysis, and security.

- Healthcare

Computer vision analyzes medical images (e.g., MRIs and X-rays) to help diagnose medical conditions faster and more accurately.

- Agriculture

Using satellites, drones, or aerial imaging, computer vision monitors crop health, detects nutrient shortages, and identifies potential issues.

- Text Extraction

Automated systems can extract useful information from large volumes of text in images and scanned documents using computer vision powered by optical character recognition (OCR).

- Facial Recognition: Identifying or verifying individuals based on facial features, widely used in security systems, social media platforms, and customer experience.

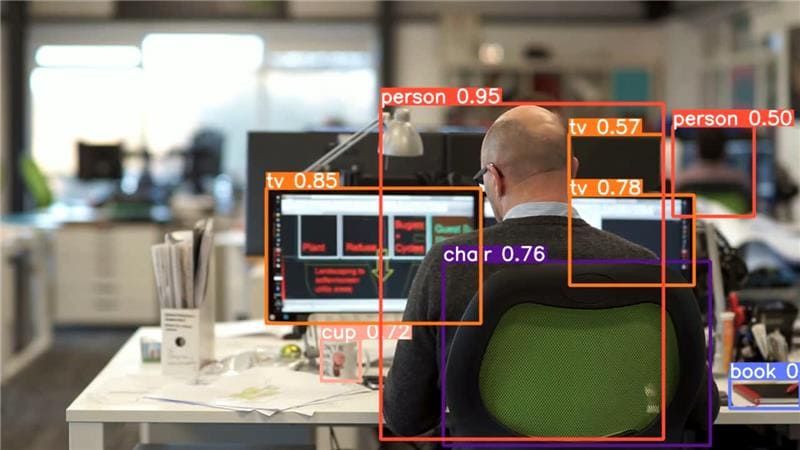

- Object Detection: Detecting and localizing objects is the backbone of autonomous vehicles, surveillance, and manufacturing, since it establishes not only that an object exists in the image but also its position and size within the image.

- Medical Imaging: Medical image analysis (e.g., computed tomography (CT) scans, radiography, MRI) is used to detect diseases or abnormalities, improve diagnosis, and support treatment planning.

- Gesture Recognition: Recognizing human body or hand motions so that users can interact with devices or systems, e.g., in games, accessibility devices, and home automation.

- Video Analysis: Processing video streams in real time for analytics applications such as security surveillance, sports analytics, and content moderation.

- Robotics: Enables robots to perceive the physical environment in which they operate and perform tasks like navigation, object manipulation, or assembly.

- Retail and Customer Experience: Analyzing customer behavior, supporting inventory control, and enhancing customer service.

Emerging Trends in Computer Vision for Automation

Several trends are shaping the future of computer vision for automation:

- AI Integration: Smarter, adaptive systems that learn and improve over time.

- Edge Computing: Real-time image processing on devices without the need to connect to the cloud.

- Increased Accessibility: Lowered cost and ease of integration for small and medium businesses.

As industries increase their use of computer vision, the technology will provide novel opportunities for automation and innovation.

Conclusion

Integrating computer vision into automation systems combines advancements in AI, hardware, and engineering. From feature extraction algorithms to real-time decision-making architectures, these components play a key role in turning visual inputs into executable automation tasks.

Computer vision reduces errors, speeds up processes, and enables real-time decision-making. It is a versatile and disruptive technology, with uses ranging from quality control and handling in manufacturing plants to clinical applications such as sophisticated diagnostics.

As neural networks grow more efficient and accurate, and as edge computing and systems engineering mature, machines will continue to push the frontiers of automation. These advances have the potential to raise productivity across many fields.
